What started as a simple question from a co-worker turned into a rabbit hole that took a bit longer to explore than anticipated: ‘Hey, I need to upload a report to SharePoint using Python.’

In the past, I’ve used SharePoint Add-in permissions to create credentials allowing an external service, app, or script to write to a site, library, list, or all of the above. However, the environment I’m currently working in does not allow Add-in permissions, and Microsoft has been slowly deprecating the service for a long time.

As of today (March 18, 2024), this is the only way I could find to upload a large file to SharePoint. Using the MS Graph SDK, you can upload files smaller than 4 MB, but that is too small to be useful in most cases.

For the script below, the following items are needed:

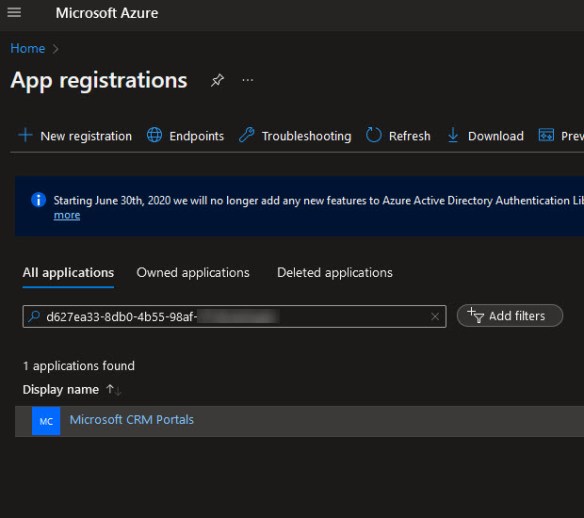

- Azure App Registration:
  - Microsoft Graph application permissions:
    - Files.ReadWrite.All
    - Sites.ReadWrite.All
- SharePoint site
- SharePoint library (aka drive)
- File to test with

    import atexit
    import os
    import urllib.parse

    import msal
    import requests

    # Azure app registration and SharePoint target (replace with your own values)
    TENANT_ID = '19a6096e-3456-7890-abcd-19taco8cdedd'
    CLIENT_ID = '0cd0453d-cdef-xyz1-1234-532burrito98'
    CLIENT_SECRET = '.i.need.tacos-and.queso'
    SHAREPOINT_HOST_NAME = 'tacoranch.sharepoint.com'
    SITE_NAME = 'python'
    TARGET_LIBRARY = 'reports'
    UPLOAD_FILE = 'C:\\code\\test files\\LargeExcel.xlsx'
    UPLOAD_FILE_NAME = 'LargeExcel.xlsx'
    UPLOAD_FILE_DESCRIPTION = 'A large excel file'  # not required

    AUTHORITY = 'https://login.microsoftonline.com/' + TENANT_ID
    ENDPOINT = 'https://graph.microsoft.com/v1.0'

    # The client-credentials flow uses the .default scope, which resolves to the
    # application permissions granted to the app registration
    # (Files.ReadWrite.All and Sites.ReadWrite.All).
    SCOPES = ['https://graph.microsoft.com/.default']

    # Persist the MSAL token cache between runs
    cache = msal.SerializableTokenCache()
    if os.path.exists('token_cache.bin'):
        with open('token_cache.bin', 'r') as f:
            cache.deserialize(f.read())


    def save_cache():
        # Only rewrite the cache file when a token was added or refreshed
        if cache.has_state_changed:
            with open('token_cache.bin', 'w') as f:
                f.write(cache.serialize())


    atexit.register(save_cache)

    app = msal.ConfidentialClientApplication(
        CLIENT_ID,
        authority=AUTHORITY,
        client_credential=CLIENT_SECRET,
        token_cache=cache,
    )

    # Try the token cache first, then request a new token
    result = app.acquire_token_silent(SCOPES, account=None)
    if result is None:
        result = app.acquire_token_for_client(SCOPES)

    if 'access_token' in result:
        print('Token acquired')
    else:
        print(result.get('error'))
        print(result.get('error_description'))
        print(result.get('correlation_id'))
        raise Exception('no access token')

    access_token = result['access_token']
    headers = {'Authorization': 'Bearer ' + access_token}

    # get the site id
    result = requests.get(f'{ENDPOINT}/sites/{SHAREPOINT_HOST_NAME}:/sites/{SITE_NAME}', headers=headers)
    result.raise_for_status()
    site_info = result.json()
    site_id = site_info['id']

    # get the drive / library id
    result = requests.get(f'{ENDPOINT}/sites/{site_id}/drives', headers=headers)
    result.raise_for_status()
    drives_info = result.json()

    drive_id = None
    for drive in drives_info['value']:
        if drive['name'] == TARGET_LIBRARY:
            drive_id = drive['id']
            break

    # stop here instead of posting to a URL that contains "None"
    if drive_id is None:
        raise Exception(f'No drive named "{TARGET_LIBRARY}" found')

    # create an upload session for the large file
    file_url = urllib.parse.quote(UPLOAD_FILE_NAME)
    result = requests.post(
        f'{ENDPOINT}/drives/{drive_id}/root:/{file_url}:/createUploadSession',
        headers=headers,
        json={
            '@microsoft.graph.conflictBehavior': 'replace',
            'description': UPLOAD_FILE_DESCRIPTION,
            'fileSystemInfo': {'@odata.type': 'microsoft.graph.fileSystemInfo'},
            'name': UPLOAD_FILE_NAME
        }
    )
    result.raise_for_status()
    upload_session = result.json()
    upload_url = upload_session['uploadUrl']

    # upload the file in chunks; the upload URL is pre-authenticated,
    # so the chunk requests do not send the Authorization header
    st = os.stat(UPLOAD_FILE)
    size = st.st_size
    CHUNK_SIZE = 10485760  # 10 MiB, a multiple of 320 KiB (327,680 bytes)
    chunks = (size + CHUNK_SIZE - 1) // CHUNK_SIZE

    with open(UPLOAD_FILE, 'rb') as fd:
        start = 0
        for chunk_num in range(chunks):
            chunk = fd.read(CHUNK_SIZE)
            bytes_read = len(chunk)
            upload_range = f'bytes {start}-{start + bytes_read - 1}/{size}'
            print(f'chunk: {chunk_num} bytes read: {bytes_read} upload range: {upload_range}')
            result = requests.put(
                upload_url,
                headers={
                    'Content-Length': str(bytes_read),
                    'Content-Range': upload_range
                },
                data=chunk
            )
            result.raise_for_status()
            start += bytes_read
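
The chunk arithmetic in the upload loop is the part that is easiest to get wrong, so here is a minimal sketch that isolates it. The helper name `content_ranges` is my own for illustration and is not part of the original script or of MS Graph. It only computes the `Content-Range` header values for a file of a given size and makes no network calls. The default chunk size matches the script's `CHUNK_SIZE`, which is a multiple of 320 KiB (327,680 bytes), the fragment size multiple that the Graph upload session documentation asks for.

```python
def content_ranges(size, chunk_size=10 * 1024 * 1024):
    """Yield (start, end, header) for each chunk of a file of `size` bytes.

    `end` is inclusive, matching the Content-Range header format
    'bytes {start}-{end}/{total}'.
    """
    start = 0
    while start < size:
        # The last chunk may be shorter than chunk_size
        end = min(start + chunk_size, size) - 1
        yield start, end, f'bytes {start}-{end}/{size}'
        start = end + 1


# Example: a hypothetical 25 MiB file splits into three chunks
ranges = list(content_ranges(25 * 1024 * 1024))
for start, end, header in ranges:
    print(header)
```

Each header produced this way pairs with the chunk read from the same offset in the file, which is what the loop in the script does with `start` and `bytes_read`.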

In the script, I’m uploading the LargeExcel file to a library named reports in the site named python. Note that the words drive and library are used interchangeably when working with MS Graph. If you see a script example that does not specify a target library but only uses root, it will write the files to the default Documents / Shared Documents library.
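To make the drive versus default library distinction concrete, here is a small sketch that builds the upload session URL both ways. The function name `upload_session_url` and the `DRIVE_ID` / `SITE_ID` values are placeholders of mine, not from the original script. The site-based form assumes Graph's `/sites/{site-id}/drive` path, which addresses the site's default document library. Treat it as an illustration of the URL shapes rather than a tested recipe.

```python
import urllib.parse

ENDPOINT = 'https://graph.microsoft.com/v1.0'


def upload_session_url(file_name, drive_id=None, site_id=None):
    """Build a createUploadSession URL for a named library or the site default.

    Pass drive_id to target a specific library (drive); pass only site_id
    to target the site's default document library.
    """
    path = urllib.parse.quote(file_name)
    if drive_id is not None:
        return f'{ENDPOINT}/drives/{drive_id}/root:/{path}:/createUploadSession'
    if site_id is not None:
        return f'{ENDPOINT}/sites/{site_id}/drive/root:/{path}:/createUploadSession'
    raise ValueError('either drive_id or site_id is required')


# Placeholder IDs for illustration only
named_library_url = upload_session_url('Large Excel.xlsx', drive_id='DRIVE_ID')
default_library_url = upload_session_url('Large Excel.xlsx', site_id='SITE_ID')
print(named_library_url)
print(default_library_url)
```

The first form is what the script above uses after looking up the drive id for the reports library; the second skips that lookup and lands in Documents / Shared Documents.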

Big thank you to Keath Milligan for providing the foundation of the script.

https://gist.github.com/keathmilligan/590a981cc629a8ea9b7c3bb64bfcb417